AI: Achievements and Challenges 2025

- Greg Port

- Jan 1

The year 2025 was another year dominated by AI in the education technology space. If you were weary of the endless stream of new tools, ideas, workshops, and webinars on the topic, the only sensible option may have been to stay in bed for the year.

Looking back at the promises made when ChatGPT launched in November 2022, it’s hard not to conclude that the impact so far has been more modest than the hype. That shouldn’t surprise us. Systemic change in education is slow by nature, and despite the noise, the fundamental structures of schooling remain largely untouched — for now.

That gap between promise and practice matters. Not because AI lacks potential, but because how we respond to it will determine whether it deepens learning or merely adds another layer of distraction. The real question isn’t whether AI will reshape education, but whether we are willing to redesign learning in ways that genuinely raise the level of thinking.

Well-designed learning keeps human judgment firmly in charge. AI can assist, accelerate, and extend learning, but it should never do the intellectual heavy lifting students need in order to grow. Skills like reasoning, sense-making, collaboration, communication, and critical thinking don’t disappear in an AI-rich world — they become even more essential.

When a task allows technology to do the thinking, the task is flawed. When a task requires students to analyse, weigh options, defend choices, and take ownership of outcomes — even with AI present — learning is deepened.

Preparing students for the intelligence age depends far more on thoughtful instructional design than on monitoring tools or compliance rules. The future of learning will be shaped by how well we design for thinking, not by how tightly we try to control the tools.

Here are some principles I developed this year for AI-enabled assessment:

For and against

It’s also important to engage seriously with views that challenge our own. Social media bubbles make confirmation bias easy to spot in others and far harder to recognise in ourselves.

Dan Meyer, a thoughtful and tech-positive voice in education, has offered some of the most compelling cautions about AI use in classrooms. His writing on chatbots raises an uncomfortable but necessary question: do students actually want to learn from an AI chatbot?

Personalised learning has long sat on education’s wish list, and AI offers possibilities we’ve never had before. In theory, intelligent tutoring systems could approach the kind of individualised support associated with Benjamin Bloom’s “two sigma” effect, the finding that students tutored one-to-one performed about two standard deviations better than students taught in a conventional class. But if our goal is simply better performance on content-heavy tests, that’s a different conversation altogether.

Meyer’s scepticism is grounded in something deeper. Education is inherently relational. Students learn from teachers, and from one another, within a social structure that supports not just academic growth but social and emotional development. While the “grammar of schooling” (timetables, class sizes, fixed curricula) deserves critique, it also creates the conditions for relationships that matter.

No matter how responsive or Socratic an AI chatbot becomes, it cannot replace that relational core.

This isn’t an argument against using chatbots in learning design. It’s an argument for keeping the teacher-student relationship front and centre.

If AI strengthens that relationship, we’re on the right path. If it weakens or displaces it, we should pause and rethink.

In a recent debate about whether schools should integrate AI now to drive improvement, two clear positions emerged.

The case for integrating AI now focused on urgency and equity. AI is already shaping every major industry, and students are using it extensively outside school. Proponents argued that schools have a responsibility to prepare students for the world they will enter by 2035 — not by ignoring AI, but by teaching critical literacy, ethical use, and thoughtful application. They framed AI as a teacher-centred support, not a replacement: a way to reduce administrative load, enable differentiation, and provide more timely feedback, provided humans remain firmly in the loop.

The case against rapid integration was more cautious. Opponents questioned whether the evidence base is strong enough to justify large-scale, near-term adoption. They pointed to decades of educational technology promises that failed to shift core outcomes and warned about opportunity cost — particularly in a system already stretched for time, trust, and capacity. There was also a craft concern: if AI takes over cognitively demanding work like lesson planning or evaluating student thinking, teacher development — especially for early-career teachers — may suffer. The conclusion wasn’t “never,” but “not yet.” Experiment carefully, study impact rigorously, and prioritise interventions with a strong evidence base.

I find myself somewhere between these positions.

I believe teaching AI fluency is urgent, but we should be guarded about full immersion until we gather more evidence.

Tools

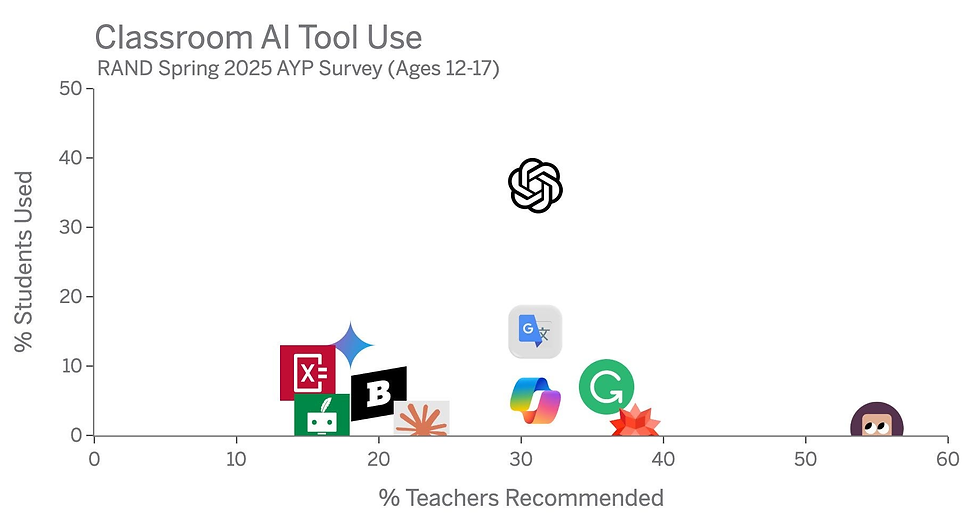

With Microsoft Copilot now available to students aged 13 to 17, we can offer a general-purpose AI tool uniformly: students sign in with their school account and are covered by enterprise data protection. This gives teachers a safe and consistent way to ensure equitable access. It will be interesting to see whether the trend in the graph of US data above still holds: despite teacher recommendations, ChatGPT remains students’ tool of choice (that is Khanmigo at the end there!).

Source: https://danmeyer.substack.com/

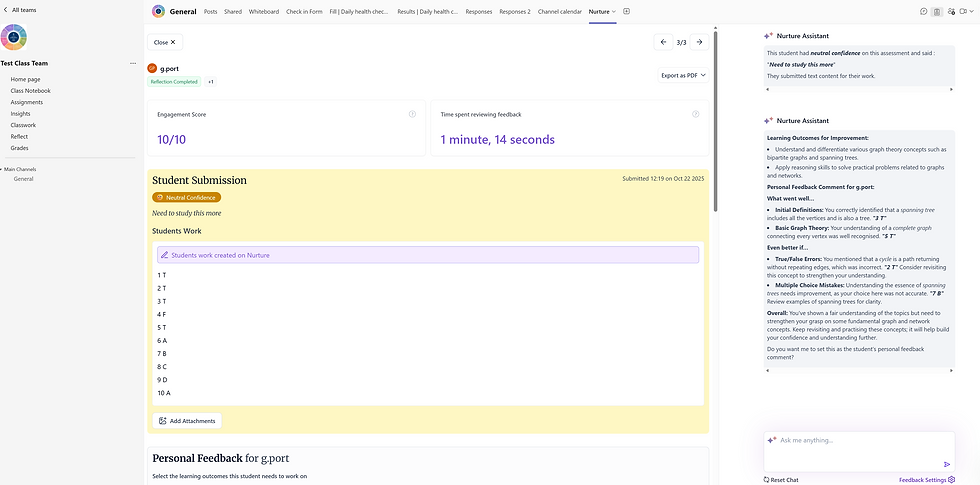

Another new tool we are integrating is Nurture AI, a Teams-based add-in that provides AI-driven feedback to students on formative tasks. Teachers simply cannot give this kind of feedback on every formative task given their time constraints, so it will be interesting to see how students interact with the tool and how widely they take it up.

Our AI bot, ASCENT (https://ascent.ascollege.wa.edu.au/), was designed to support staff with curriculum design by integrating the WA Curriculum, the Melbourne Metrics capabilities and our College pedagogies. It has proved outstanding, and I believe tailoring it to our context is the key to the quality of the responses we get.

Of course, you would be hard-pressed to find an edtech tool that is NOT using AI somewhere. Across our suite of tools (too numerous to list, but including Mathspace, Stile, Education Perfect, Atomi, Quizlet, Canva and many more), these enhancements make the products easier to use and more effective for students and staff.

Expansion of AI in Administrative and Support Roles

One of the most successful workshops I ran this year shared ideas with school administration staff on using AI in their roles. Applied well, these ideas can significantly improve the quality and responsiveness of the work these vital support staff do.

AI chatbots are excellent for answering student questions about assignments, deadlines and campus resources. Because they operate 24/7, they improve communication and significantly reduce administrative time. An AI bot that improves search on our public website and on the intranet used by staff, students and parents would be a valuable addition.

Key innovations in 2025

Looking Ahead: Opportunities for 2026 and Beyond

Looking ahead, the real opportunities lie not in chasing the next tool, but in designing learning experiences that make thinking unavoidable. Personalised pathways, intelligent tutoring, administrative efficiency, language translation, and improved feedback all hold promise — if they are guided by clear principles and sound pedagogy.

AI will continue to expand across education, whether we like it or not. The question is whether we allow it to flatten learning or use it to deepen it. The future won’t be shaped by how tightly we control the tools, but by how thoughtfully we design for thinking.

It will be a fun ride — but only if we’re deliberate about where we’re going.
